Does the prospect of artificial intelligence make us doubt the authenticity of human intelligence, or is it forever a copy or fake version of human intelligence? Give reasons for both arguments. If you have the opportunity, watch the film Blade Runner as a fictional account of the problem. Look up the term ‘uncanny valley’ as a prompt to thought.

Preparation

I am familiar with Blade Runner and have watched it a number of times, though I refreshed my memory of the plot with the IMDb synopsis. Essentially, it is about advanced androids (replicants), created by humans as slave labour on other worlds, who rise up and threaten their creators. They look identical to humans and can only be detected with a special test that mostly measures their lack of emotional response. A new prototype replicant, Rachael, has been developed who is capable of emotions because of implanted memories. She also seems to have a limitless lifespan (or does she?). Some issues raised by the film: what does it mean to be an android or a human? Could androids take over from humans? How do entities achieve consciousness of self?

‘Uncanny valley’ is a term new to me. I see from a Guardian article (Lay: 2015) that it is ‘a characteristic dip in emotional response that happens when we encounter an entity that is almost, but not quite, human.’ The article explains that not everyone experiences this, and that the explanations for the phenomenon are uncertain. The uneasiness would not be felt when watching the replicants in Blade Runner, because they appear identical to humans. Apparently it is the near miss that causes the uneasiness.

More than appearance

The issues behind the development of artificial intelligence are complex and involve far more than visual experience. I was an avid reader of science fiction from an early age, in particular Isaac Asimov’s robot stories and his Three Laws of Robotics. These introduced me to some of the possibilities of technology and to the controls that might be needed as it develops.

More recently I have listened to many Radio 4 programmes discussing artificial intelligence and its impact on humanity, both positive and potentially negative.

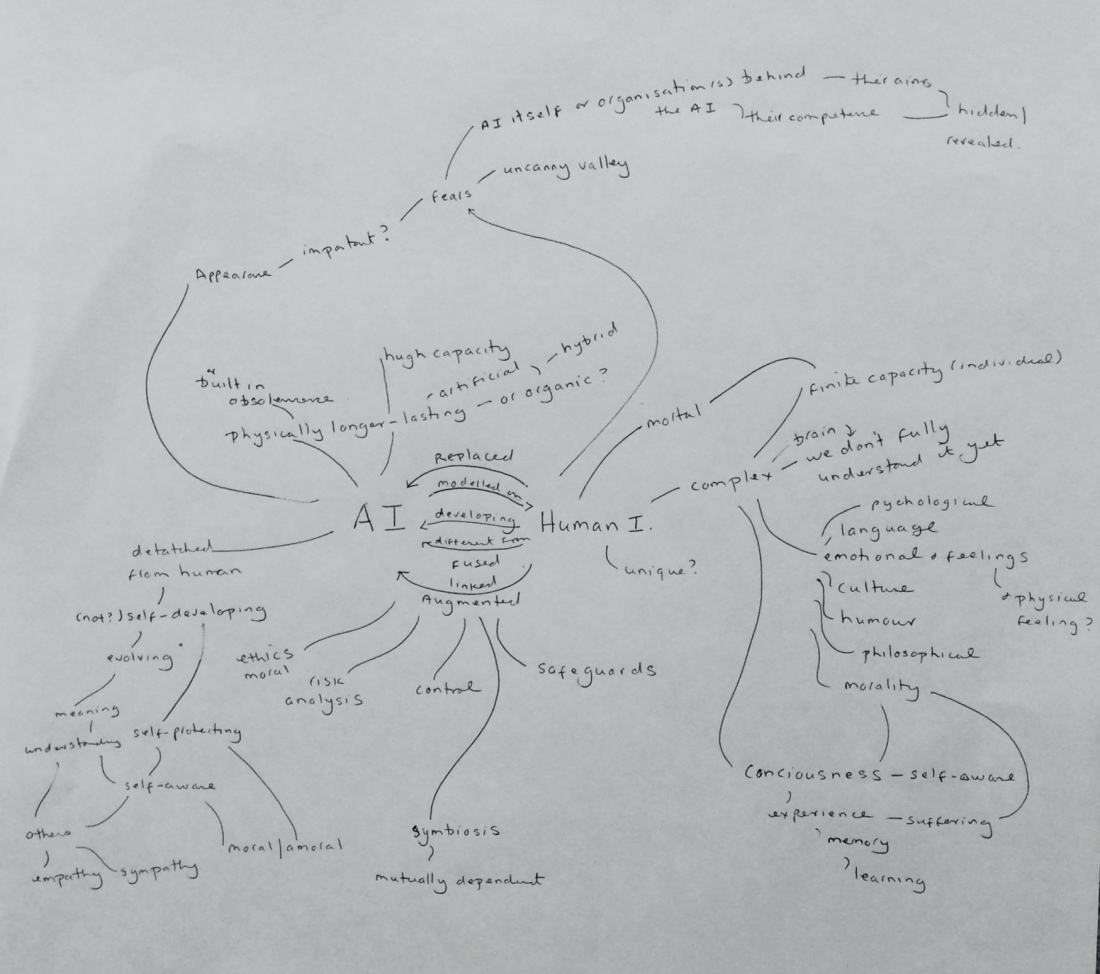

I have linked some of these issues on this mind map.

Argument 1. Artificial intelligence makes us doubt the authenticity of human intelligence

What is meant by ‘the authenticity of human intelligence’? Presumably the question is whether artificial intelligence could replace, or at least equal, human intelligence. So what is unique or ‘authentic’ about human intelligence? We have:

- Emotional intelligence and feelings

- Humour (is that a feeling?)

- Memories

- Morality

- Mortality – and our awareness of it and of ourselves

Humans are able to form memories, feel emotion, and make decisions based on logic combined with morality and ethics. We are aware of our own mortality and of our species’ history. But given the developments in artificial intelligence, can we be sure that the entities we create won’t eventually be able to do these things too?

Mortality and emotion – having a finite lifespan as individuals, and being aware of it and of ourselves – could also be features of artificial intelligence if it were programmed that way. How do we know that such beings couldn’t be self-aware and feel emotions? We barely understand how we ourselves think or how we come to be self-aware. These capacities may work differently in another entity but serve similar purposes.

Morality, when broken down, is a set of rules, mostly culturally developed. These rules could be programmed into a machine and might even be applied more consistently than we apply them as individuals, since we sometimes put morality aside for the sake of convenience or gain.

Scientific progress has taken us a long way over the last few hundred years, and artificial intelligence has developed capabilities we would once not have believed possible. Who knows what will be possible in future, and what the benefits and risks might be? Even the late Stephen Hawking warned, “The development of full artificial intelligence could spell the end of the human race…. It would take off on its own, and re-design itself at an ever increasing rate. Humans, who are limited by slow biological evolution, couldn’t compete, and would be superseded.” (Sulleyman: 2017)

Argument 2. Artificial intelligence will always be a copy or fake version of human intelligence

However, Hawking does not envisage super-humans with all the attributes of what we consider ‘human’. Instead he envisages another form of intelligence that ‘could grow so powerful it might end up killing humans unintentionally’, rather like a genetically engineered virus escaping from a laboratory. One risk, then, is not that artificial intelligence will be aggressive or anti-human, but that it will be indifferent to us.

The uniqueness of humanity, with all its flaws, faults and quirks, is the result of a very long process of biological and cultural development and change. We have developed mentally, physically and culturally in an imperfect, nuanced, unscientific way. Our decisions are not always logical or moral. Our bodies are assaulted daily in ways that can affect our DNA and therefore the way we function. We are endlessly curious. But this messiness is what makes us human. We are imperfect.

As we develop artificial intelligence, we aim to make it perfect. We can build in logic, memory, safeguards, even morality, and perhaps eventually emotions. Artificial intelligence may even (and probably already does) make better decisions than we do, or at least more consistent ones. But whatever it looks like on the outside, it will always be an approximation of the complexity of humanity.

References and bibliography

BBC (2015). Analysis: Artificial Intelligence. [podcast] BBC Radio 4. First broadcast 23 February 2015. Available at: https://www.bbc.co.uk/programmes/b05372sx

King, B. (2018). Could a Robot be Conscious? Philosophy Now, Issue 125.

Lay, S. (2015). Uncanny valley: why we find human-like robots and dolls so creepy. [online] The Guardian. Available at: https://www.theguardian.com/commentisfree/2015/nov/13/robots-human-uncanny-valley [Accessed 13 Aug. 2018].

Sulleyman, A. (2017). Stephen Hawking has a terrifying warning about AI. [online] The Independent. Available at: https://www.independent.co.uk/life-style/gadgets-and-tech/news/stephen-hawking-artificial-intelligence-fears-ai-will-replace-humans-virus-life-a8034341.html [Accessed 13 Aug. 2018].

Reflection

I found this exercise confusing. I am not sure of its purpose and may have missed it. I am studying visual culture, and ‘uncanny valley’ refers to a phenomenon concerning the visual appearance of robots. In Blade Runner, the artificially intelligent ‘replicants’ have the appearance of humans.

The exercise comes after the section on the simulacrum. I can see how the film The Matrix relates to that, as described in the course text, along with the links to Plato’s Cave, but I can’t make the intellectual link to this exercise. Perhaps it is about artificial intelligence as a simulacrum: a debased version of the original human intelligence? False, second-hand, deceptive. Then there is the term ‘deception’: robots deceiving us into thinking they are human. The uncanny valley makes us nervous because robots come close to this accuracy but do not quite achieve it. But the tests are not about visual accuracy. They are about accuracy of thought, such as the Turing test or the emotional-response test in Blade Runner.

I can see some links to the paragraph following the exercise, which discusses ‘model and copy’. So perhaps this exercise is about looking beyond the visual to determine what is ‘authentic’, in this case human intelligence. It may also be about our need to ‘take refuge in the past which we nostalgically regard as more real.’

Yet AI is much more than appearance and human-looking robots. It ranges from computer algorithms that solve simple problems, through Amazon’s Alexa, which speaks like a human (well, a bit) but looks like a box, to some of the most sophisticated Japanese robots.

Ever since AI’s beginnings, there have been conflicting views about whether it will enhance or threaten human civilisation. Some experts believe that this technology will advance and augment our intelligence. Others, such as Bill Gates, have warned that machine intelligence could become strong enough to be a concern.